My solution to common issues with the bloom post processing effect

1. Overview

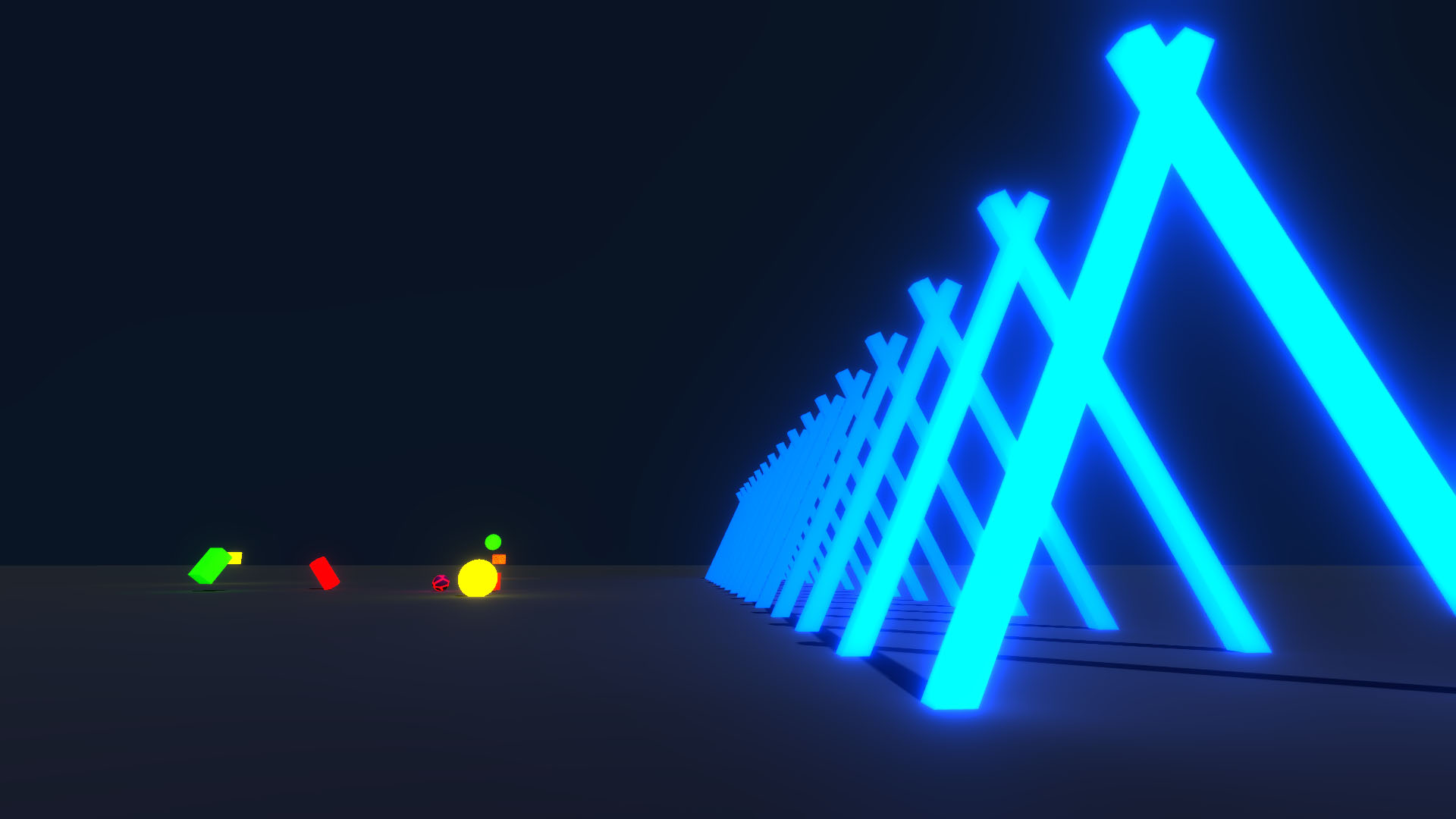

This is my custom shader implementation of bloom, built to make the popular post-processing effect more appealing. The shader simulates light falloff in the post-processing step by using the camera depth texture as a bloom intensity multiplier; I call this solution “bloom attenuation”. This prevents bloom from oversaturating the screen and better differentiates bright objects at different distances from the player camera. Try the playable tech demo to change the bloom shader parameters and see the individual bloom rendering steps in real time!

See video below for a full project explanation.

2. Highlights

- Novel bloom solution, implemented from scratch

- Extensive Cg/HLSL shader and graphics rendering work, with C# programming for demo game logic

- Playable Unity tech demo

  - Adjust all bloom shader parameters and see individual rendering steps with a custom UI in real time

  - Experience the effect with a custom first person controller

  - On rails demo camera (as shown in video) to adjust parameters on the move

- Project overview video

  - Scripting, video editing, and narration

  - Full explanation of how post processing bloom works, with animated diagrams

  - Tech and game design analysis of bloom use in other games

3. Implementation

This project was done in Unity 2019, using the built-in render pipeline. To apply the depth bloom intensity multiplier, post processing bloom had to be implemented from scratch. This is done with a Cg/HLSL multi-pass bloom shader applied to the main camera’s final image using Unity’s OnRenderImage() function in C#.

Snippet of Custom Bloom Shader

half3 Sample(float2 uv) {

return tex2D(_MainTex, uv).rgb;

}

half3 SampleBox(float2 uv, float delta) {

float4 o = _MainTex_TexelSize.xyxy * float2(-delta, delta).xxyy;

half3 s =

Sample(uv + o.xy) + Sample(uv + o.zy) +

Sample(uv + o.xw) + Sample(uv + o.zw);

return s * 0.25f;

}

half3 Prefilter(half3 c) {

half brightness = max(c.r, max(c.g, c.b)); // brightness is max of color channels

half soft = brightness - _Filter.y;

soft = clamp(soft, 0, _Filter.z);

soft = soft * soft * _Filter.w;

half contribution = max(soft, brightness - _Filter.x);

contribution /= max(brightness, 0.00001);

return c * contribution;

}

float GetDistanceFromCamera(float2 uv) {

return tex2D(_DepthTexture, uv).r; // explicit .r instead of an implicit float4-to-float truncation

}

SubShader{

Cull Off

ZTest Always

ZWrite On

Pass { // 0 - Prefilter + first downsample

CGPROGRAM

#pragma vertex VertexProgram

#pragma fragment FragmentProgram

half4 FragmentProgram(Interpolators i) : SV_Target{

return max(lerp(1, GetDistanceFromCamera(i.sPos), _Attenuation), _MaxBloomReduction) * half4(Prefilter(SampleBox(i.uv, 1)), 1);

}

ENDCG

}

Pass { // 1 - downsample

CGPROGRAM

#pragma vertex VertexProgram

#pragma fragment FragmentProgram

half4 FragmentProgram(Interpolators i) : SV_Target{

return half4(SampleBox(i.uv, 1), 1);

}

ENDCG

}

Pass { // 2 - upsample

Blend One One // additive blend

CGPROGRAM

#pragma vertex VertexProgram

#pragma fragment FragmentProgram

half4 FragmentProgram(Interpolators i) : SV_Target {

return half4(SampleBox(i.uv, 0.5), 1);

}

ENDCG

}

Pass { // 3 - final upsample + bloom addition

CGPROGRAM

#pragma vertex VertexProgram

#pragma fragment FragmentProgram

half4 FragmentProgram(Interpolators i) : SV_Target {

half4 c = tex2D(_SourceTex, i.uv);

c.rgb += _Intensity * SampleBox(i.uv, 0.5);

return c;

}

ENDCG

}

Pass { // 4 - Bloom Debug

CGPROGRAM

#pragma vertex VertexProgram

#pragma fragment FragmentProgram

half4 FragmentProgram(Interpolators i) : SV_Target {

return _Intensity * half4(SampleBox(i.uv, 0.5), 1);

}

ENDCG

}

Pass { // 5 - Depth Debug

Tags { "RenderType" = "Opaque" }

CGPROGRAM

#pragma vertex VertexProgram

#pragma fragment FragmentProgram

half4 FragmentProgram(Interpolators i) : SV_Target {

return GetDistanceFromCamera(i.sPos);

}

ENDCG

}

}

Note: Bloom attenuation is applied in the fragment program of pass 0 (prefilter + first downsample). The multiplier is wrapped in a max(...) with a tweakable _MaxBloomReduction floor so that very distant objects can still have bloom if desired (e.g. the moon). See video for full details.
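To make the multiplier in pass 0 easier to reason about, here is a minimal sketch of the same arithmetic in plain C# (no Unity dependency). The class and method names are hypothetical and exist only for illustration; `depthFactor` stands for the value sampled from the depth texture, which is assumed to be 1 near the camera and 0 at the depth camera's far plane.

```csharp
using System;

// Illustrative only: mirrors max(lerp(1, depth, _Attenuation), _MaxBloomReduction)
// from pass 0 of the bloom shader. Names here are hypothetical.
static class BloomAttenuationSketch
{
    // Same formula as the HLSL lerp(a, b, t) intrinsic.
    public static double Lerp(double a, double b, double t) => a + (b - a) * t;

    // depthFactor:       assumed 1 near the camera, 0 at the depth camera's far plane
    // attenuation:       0 disables the effect, 1 applies the depth factor fully
    // maxBloomReduction: floor that keeps some bloom on very distant objects
    public static double Multiplier(double depthFactor, double attenuation, double maxBloomReduction)
        => Math.Max(Lerp(1.0, depthFactor, attenuation), maxBloomReduction);

    static void Main()
    {
        foreach (double d in new[] { 1.0, 0.5, 0.0 })
            Console.WriteLine($"depthFactor={d}: multiplier={Multiplier(d, 1.0, 0.2)}");
    }
}
```

With full attenuation and a floor of 0.2, an object at the far plane (depth factor 0) keeps a multiplier of 0.2 instead of dropping to 0, which is the behavior the moon relies on.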

Applying the Screen Shader in C#

private void OnRenderImage(RenderTexture source, RenderTexture destination) {

if(bloom == null) {

bloom = new Material(bloomShader);

bloom.hideFlags = HideFlags.HideAndDontSave;

}

bloom.SetFloat("_Attenuation", attenuation);

bloom.SetFloat("_MaxBloomReduction", maxBloomReduction);

depthTextureRender.UpdateDepthCamFarPlaneScale(attenuationDistanceScale);

if(isDebug_showDepthOnly) {

Graphics.Blit(source, destination, bloom, DebugDepthPass);

return;

}

float knee = threshold * softThreshold;

Vector4 filter;

filter.x = threshold;

filter.y = filter.x - knee;

filter.z = 2f * knee;

filter.w = 0.25f / (knee + 0.00001f);

bloom.SetVector("_Filter", filter);

bloom.SetFloat("_Intensity", intensity);

bloom.SetTexture("_DepthTexture", depthTexture);

int width = source.width / 2;

int height = source.height / 2;

RenderTextureFormat format = source.format;

// First downsampling iteration

RenderTexture currentDestination = RenderTexture.GetTemporary(width, height, 0, format);

textures[0] = currentDestination;

Graphics.Blit(source, currentDestination, bloom, BoxDownPrefilterPass);

RenderTexture currentSource = currentDestination;

// Progressive Downsampling

int i = 1;

for(; i < iterations; i++) {

width /= 2;

height /= 2;

if(height < 2) {

break;

}

currentDestination = RenderTexture.GetTemporary(width, height, 0, format);

textures[i] = currentDestination;

Graphics.Blit(currentSource, currentDestination, bloom, BoxDownPass);

currentSource = currentDestination;

}

// Progressive Upsampling

for(i -= 2; i >= 0; i--) {

currentDestination = textures[i];

textures[i] = null;

Graphics.Blit(currentSource, currentDestination, bloom, BoxUpPass);

RenderTexture.ReleaseTemporary(currentSource);

currentSource = currentDestination;

}

if(isDebug_showBloomContributionOnly) {

Graphics.Blit(currentSource, destination, bloom, DebugBloomPass);

}

else {

bloom.SetTexture("_SourceTex", source);

Graphics.Blit(currentSource, destination, bloom, FinalBloomUpPass);

}

RenderTexture.ReleaseTemporary(currentSource);

}
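The `_Filter` vector built in `OnRenderImage` packs a soft-knee threshold curve that `Prefilter` evaluates in the shader. As a rough worked example, the sketch below reproduces both halves in plain C# so the curve can be inspected for a single brightness value. The class and method names are hypothetical, and the code is only an illustration of the arithmetic already shown above, not a replacement for the shader.

```csharp
using System;

// Illustrative only: combines the C# filter setup and the shader's Prefilter math.
static class SoftKneeSketch
{
    // Returns the factor the pixel color is multiplied by in Prefilter.
    public static double Contribution(double brightness, double threshold, double softThreshold)
    {
        // Same packing as the filter vector in OnRenderImage.
        double knee = threshold * softThreshold;
        double fx = threshold;                // hard threshold
        double fy = threshold - knee;         // start of the knee
        double fz = 2.0 * knee;               // width of the knee
        double fw = 0.25 / (knee + 0.00001);  // scale for the quadratic part

        // Same steps as Prefilter in the shader.
        double soft = Math.Min(Math.Max(brightness - fy, 0.0), fz);
        soft = soft * soft * fw;
        double contribution = Math.Max(soft, brightness - fx);
        return contribution / Math.Max(brightness, 0.00001);
    }

    static void Main()
    {
        foreach (double b in new[] { 0.5, 1.0, 1.5, 2.0 })
            Console.WriteLine($"brightness={b}: contribution={Contribution(b, 1.0, 0.5)}");
    }
}
```

With a threshold of 1 and a soft threshold of 0.5, a pixel at brightness 0.5 contributes nothing, a pixel exactly at the threshold already contributes about 0.125 of its color, and a pixel at brightness 2 contributes half of its color. Setting the soft threshold to 0 collapses the knee into a hard cutoff.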

3.1 Other Shaders

Depth Camera Shader

Since we are using the default forward renderer, a second camera is used to render depth into a render texture. This texture is sampled in the bloom shader as the attenuation multiplier. Having a second camera also allows us to fine-tune the attenuation distance scale (i.e. the distance at which attenuation reaches its maximum, with zero bloom) by setting the far plane distance of the depth camera independently of the main camera. This can be adjusted in real time with the “Distance Scale” parameter in the tech demo.

half4 frag(v2f i) : SV_Target {

return 1 - Linear01Depth(tex2Dproj(_CameraDepthTexture, UNITY_PROJ_COORD(i.scrPos)).r); // Linear01Depth expects a scalar depth sample

}

Linear depth was used for this tech demo for simplicity, but the default non-linear depth would perhaps provide a more realistic inverse square law approximation for light attenuation. The linear depth value is also inverted (1 - depth) so that objects near the camera map to 1 (full bloom) and objects at the depth camera’s far plane map to 0 (maximum attenuation).
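As a rough illustration of how the inverted linear depth behaves, and of how the depth camera's far plane acts as the distance scale, here is a small sketch in plain C#. It assumes that linear 0..1 depth equals eye-space distance divided by the far plane distance, and it uses the conventional (non-reversed) perspective depth formula for comparison. All names are hypothetical.

```csharp
using System;

// Illustrative only: depth factors for a point at eye-space distance z.
static class DepthFactorSketch
{
    // Linear 0..1 depth: 0 at the camera, 1 at the far plane (assumed z / far).
    public static double Linear01(double z, double far)
        => Math.Min(Math.Max(z / far, 0.0), 1.0);

    // Inverted linear depth, as returned by the depth camera shader:
    // 1 near the camera, 0 at the far plane.
    public static double InvertedLinear(double z, double far)
        => 1.0 - Linear01(z, far);

    // Conventional (non-reversed) perspective depth in 0..1, for comparison only.
    public static double NonLinear01(double z, double near, double far)
        => (far / (far - near)) * (1.0 - near / z);

    static void Main()
    {
        double z = 25.0;
        // Halving the far plane distance makes the same object attenuate more.
        Console.WriteLine($"far=100: factor={InvertedLinear(z, 100.0)}");
        Console.WriteLine($"far=50:  factor={InvertedLinear(z, 50.0)}");
        // Non-linear depth is already close to 1 at this distance.
        Console.WriteLine($"non-linear depth (near=0.3, far=100): {NonLinear01(z, 0.3, 100.0)}");
    }
}
```

An object 25 units away keeps a factor of 0.75 with a far plane at 100 units, but only 0.5 with a far plane at 50 units. The non-linear value for the same point is already very close to 1, which suggests that using it directly would make bloom fall off much more sharply with distance.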

Moon Billboard

The moon in the tech demo is rendered as a relatively distant billboard sprite rather than as part of a skybox texture. This lets us fine-tune its bloom intensity while still making it look like a far-away object.

v2f vert(appdata v) {

v2f o;

o.pos = UnityObjectToClipPos(v.vertex);

o.uv = v.uv.xy;

// billboard mesh towards camera

float3 vpos = mul((float3x3)unity_ObjectToWorld, v.vertex.xyz);

float4 worldCoord = float4(unity_ObjectToWorld._m03, unity_ObjectToWorld._m13, unity_ObjectToWorld._m23, 1);

float4 viewPos = mul(UNITY_MATRIX_V, worldCoord) + float4(vpos, 0);

float4 outPos = mul(UNITY_MATRIX_P, viewPos);

o.pos = outPos;

o.vertColor = v.vertColor;

return o;

}